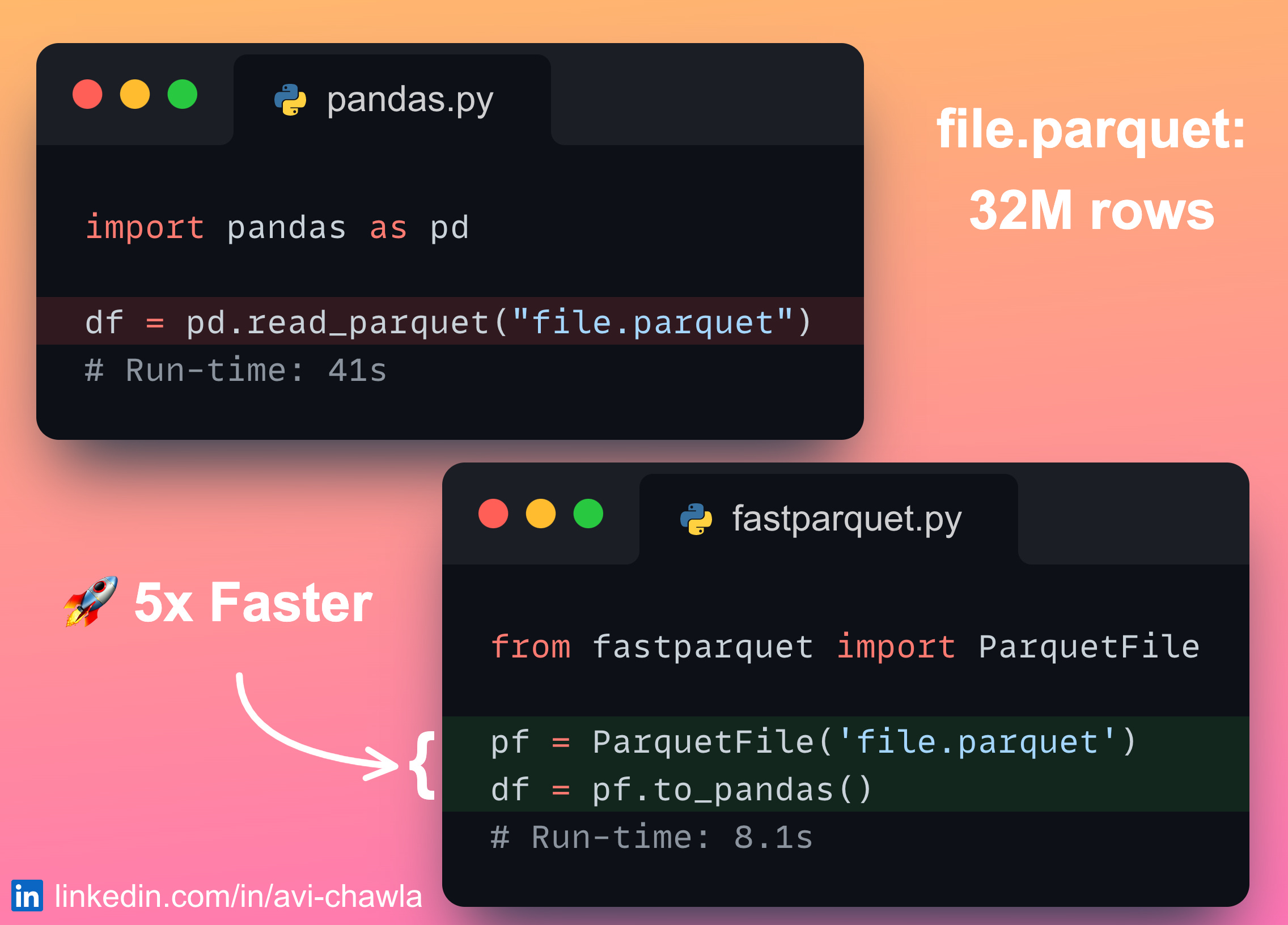

s3.read_parquet() uses more memory than the pandas read_parquet() · Issue #1198 · aws/aws-sdk-pandas · GitHub

How to easily load CSV, Parquet and Excel files in SageMaker using Pandas | by Nikola Kuzmic | Medium

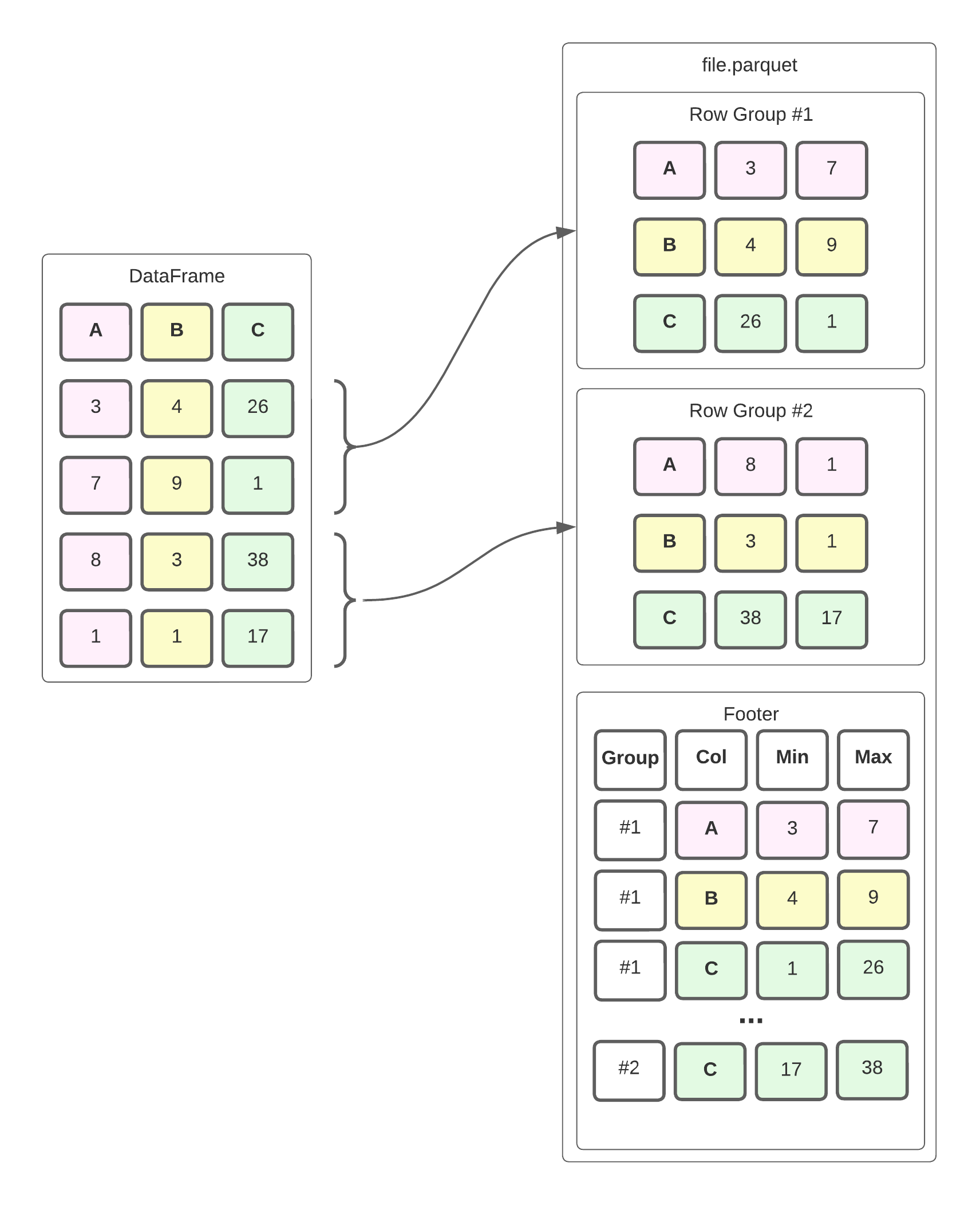

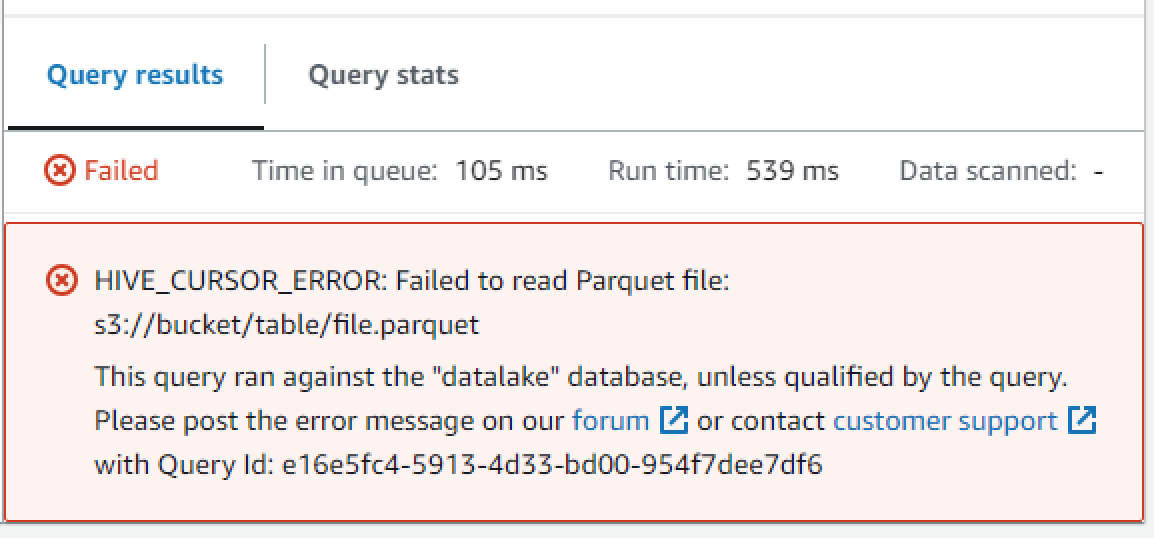

Push-Down-Predicates in Parquet and how to use them to reduce IOPS while reading from S3 | tecRacer Amazon AWS Blog

amazon s3 - Spark Streaming appends to S3 as Parquet format, too many small partitions - Stack Overflow

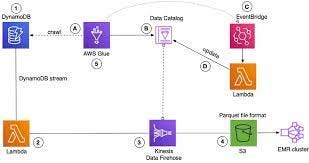

Serverless Conversions From GZip to Parquet Format with Python AWS Lambda and S3 Uploads | The Coding Interface
