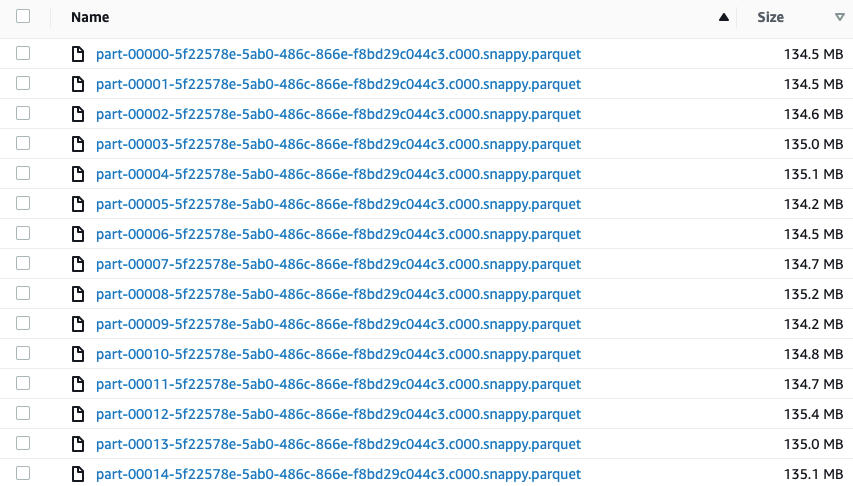

apache spark - Partition column is moved to end of row when saving a file to Parquet - Stack Overflow

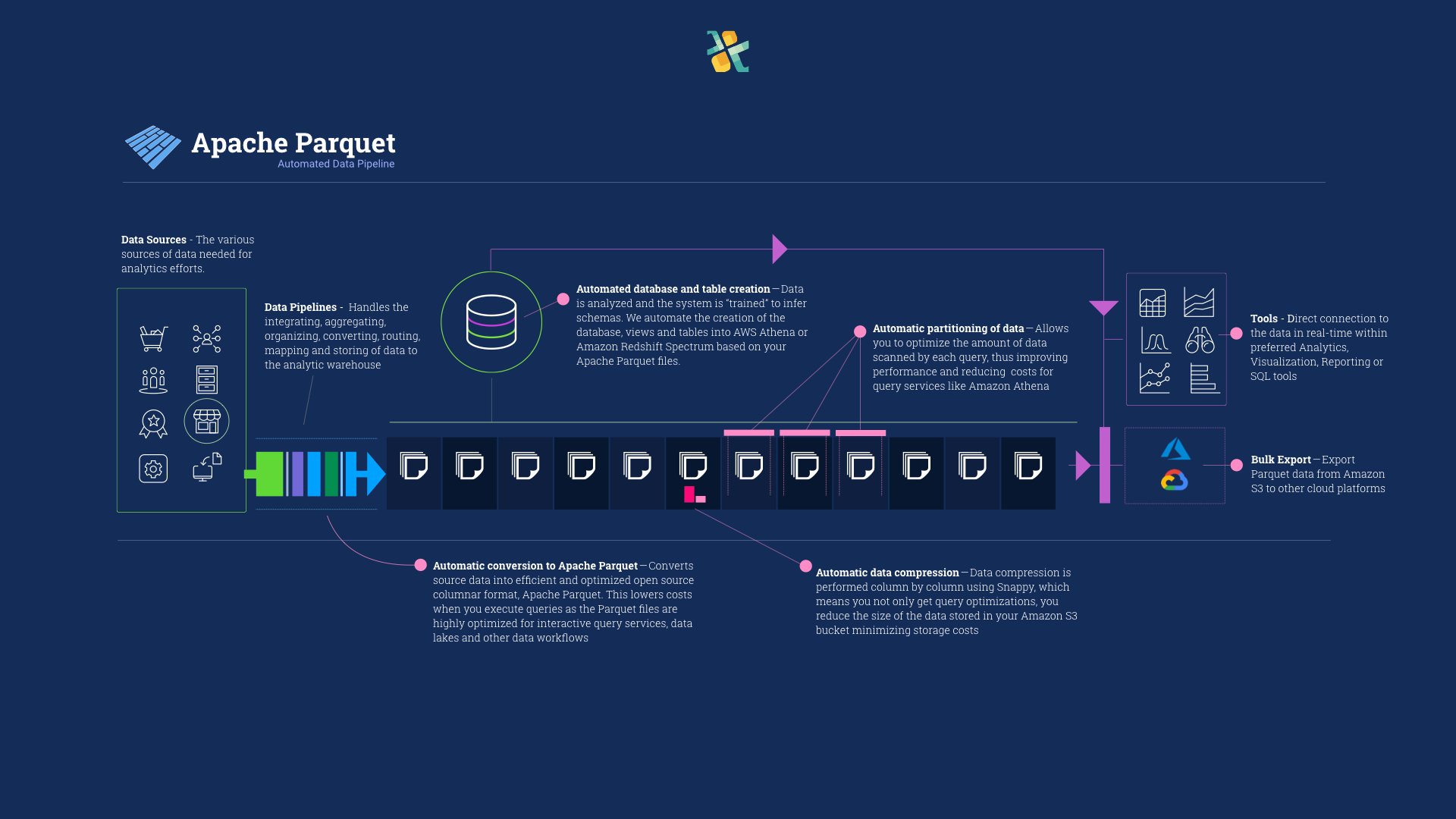

3 Quick And Easy Steps To Automate Apache Parquet File Creation For Google Cloud, Amazon, and Microsoft Azure Data Lakes | by Thomas Spicer | Openbridge

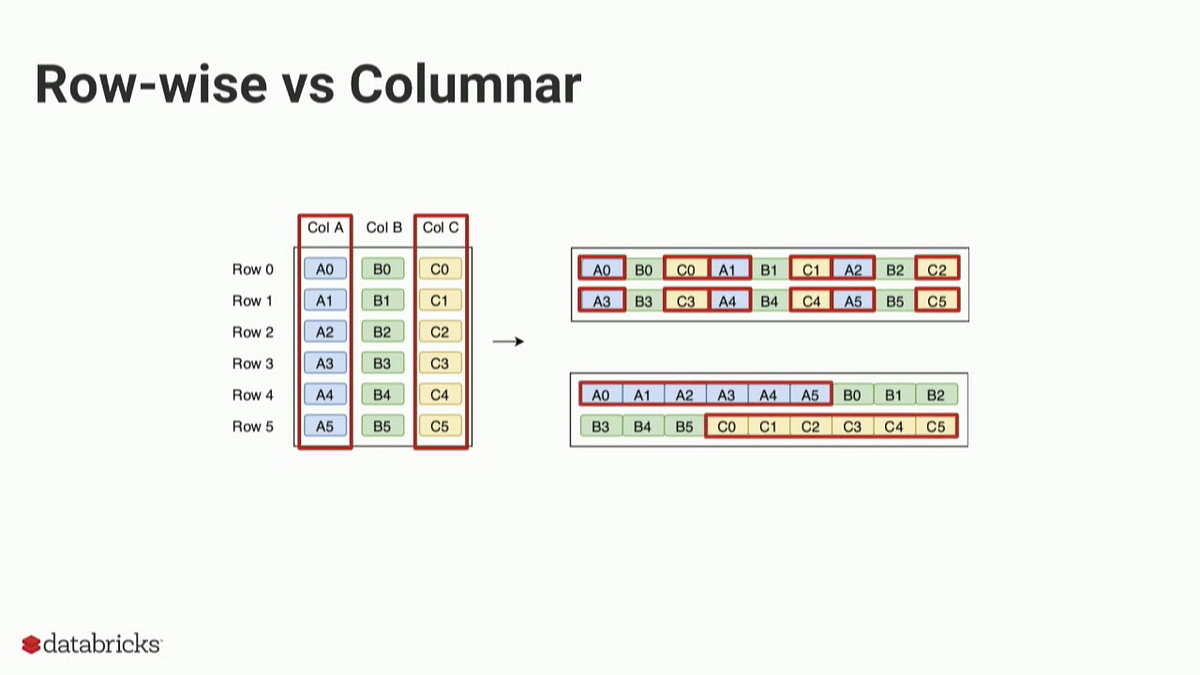

Python and Parquet performance optimization using Pandas, PySpark, PyArrow, Dask, fastparquet and AWS S3 | Data Syndrome Blog